Object detection is a computer vision technique with many practical applications. The typical object detection training workflow requires storing data in the cloud for centralized training. However, privacy concerns and the high cost of transmitting video data make it highly challenging to build object detection models on large, centrally stored training datasets with this approach.

To decouple machine learning from the need to store large amounts of data in the cloud, a new machine learning framework called federated learning was proposed. Federated learning provides a promising approach for model training without compromising data privacy and security. Nevertheless, there is currently no easy-to-use tool that lets computer vision application developers who are not experts in federated learning conveniently apply this technology in their systems.

Today, we introduce an object detection platform powered by Webank and Extreme Vision. To the best of our knowledge, this is the first real application of federated learning in computer vision-based tasks.

FedVision – An Online Visual Object Detection Platform powered by Federated Learning

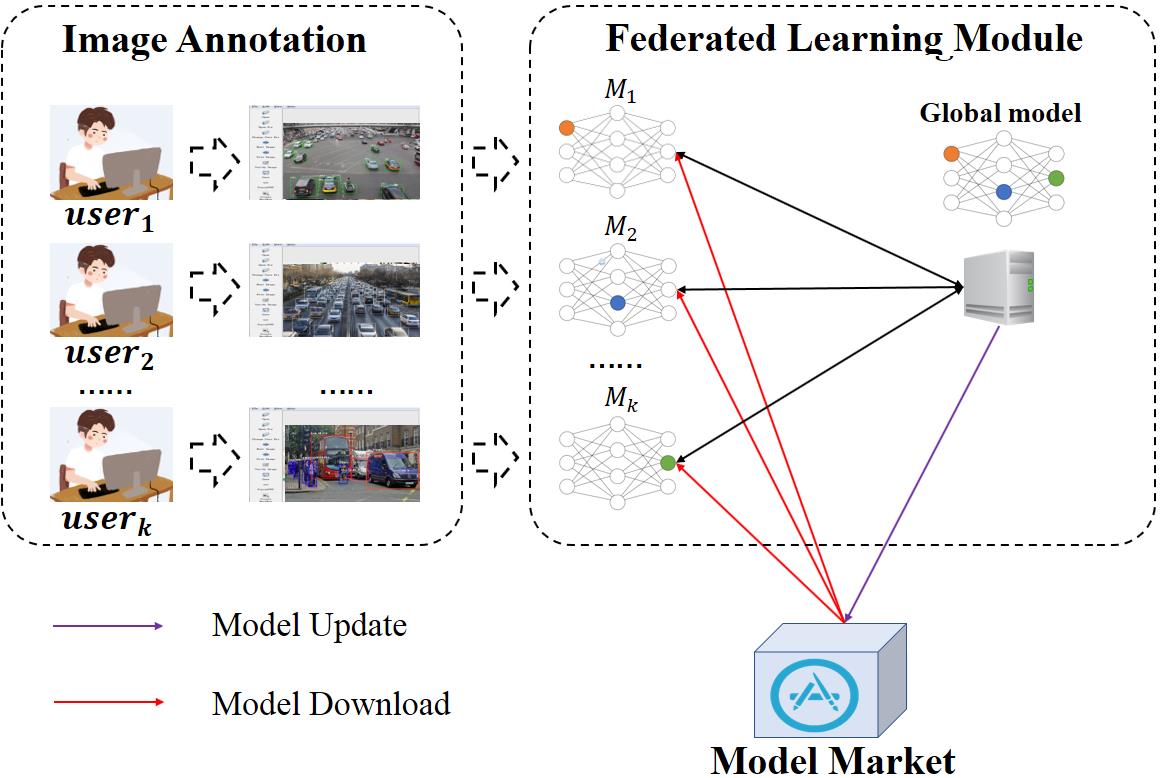

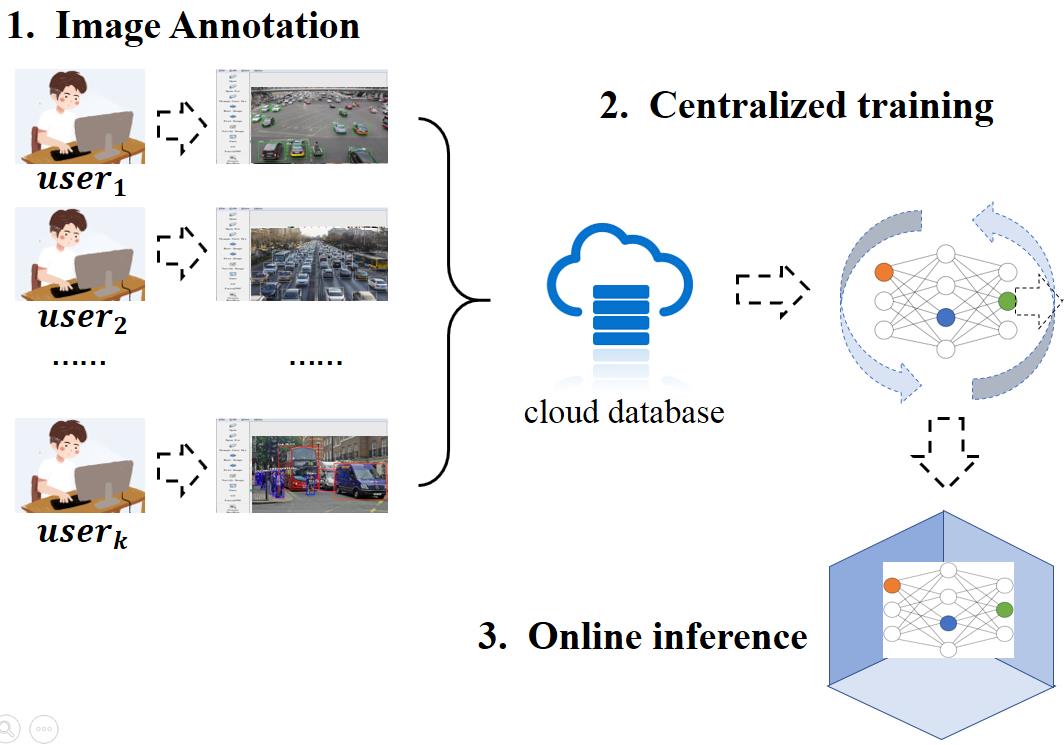

Figure 1: The difference between the traditional object detection workflow (left) and our new FedVision workflow (right).

Our FedVision workflow is shown in Figure 1 (right). It consists of three main steps: 1) crowdsourced image annotation; 2) federated model training; and 3) federated model update.

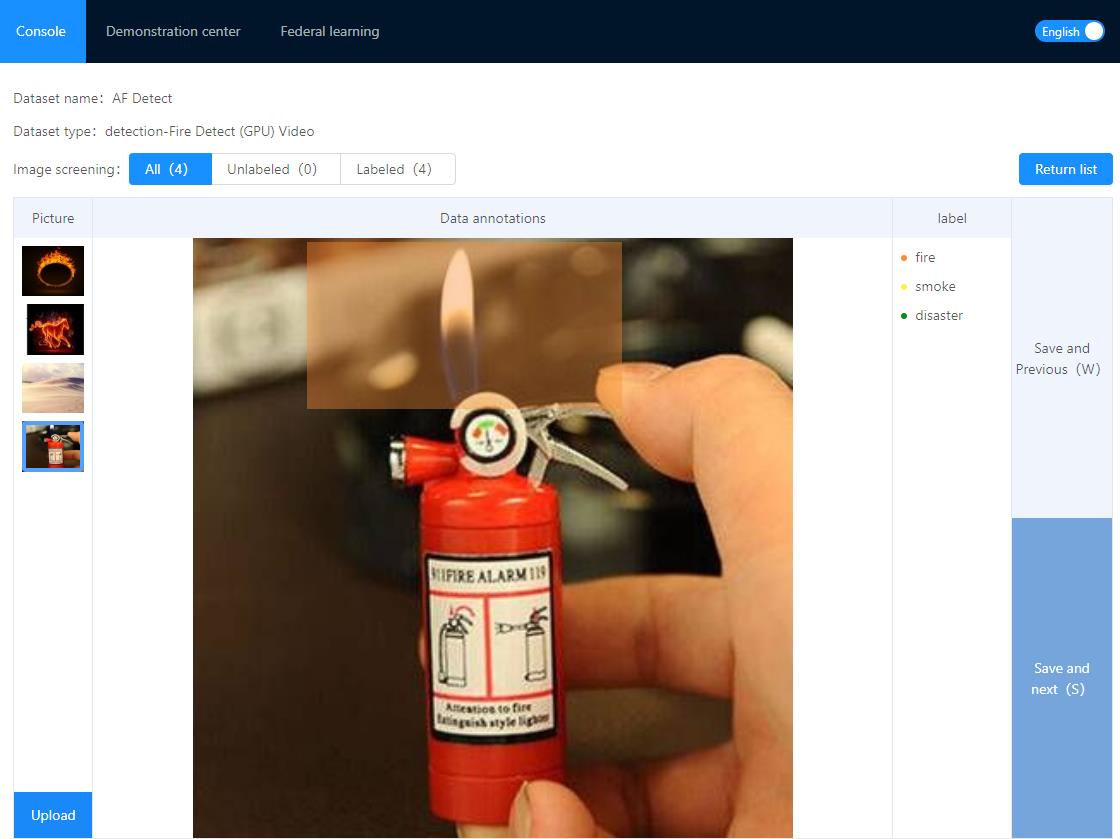

- Crowdsourced Image Annotation

This module is designed for data owners to easily label their locally stored image data for FL model training. A user can annotate a given image on their local device using the interface provided by FedVision, as illustrated in Figure 2.

- Federated Model Training

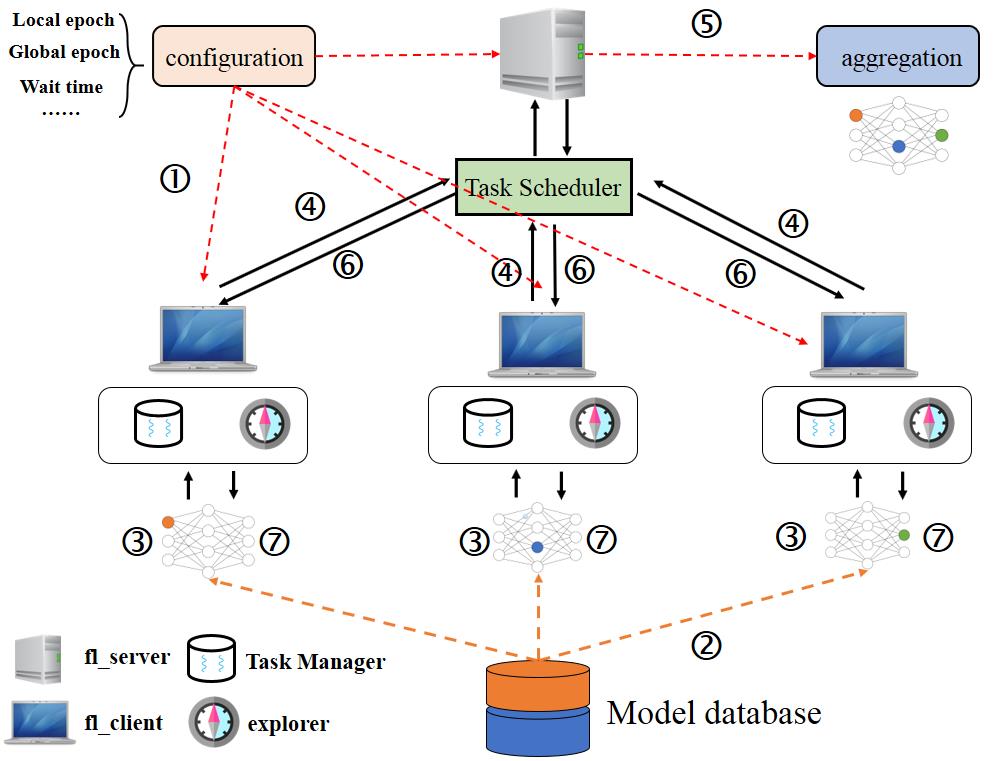

From a system architecture perspective, the federated model training module consists of the following six components:

1. Configuration: it allows users to configure training information, such as the number of iterations, the number of reconnections, the server URL for uploading model parameters and other key parameters.

2. Task Scheduler: it performs global dispatch scheduling, coordinating communications between the federated learning server and the clients in order to balance the utilization of local computational resources during federated model training. The load-balancing approach jointly considers clients’ local model quality and the current load on their local computational resources in an effort to maximize the quality of the resulting federated model.

3. Task Manager: when multiple model algorithms are being trained concurrently by the clients, this component coordinates the concurrent federated model training processes.

4. Explorer: this component monitors resource utilization on the client side (e.g., CPU usage, memory usage, and network load) to inform the Task Scheduler's load-balancing decisions.

5. FL SERVER: this is the server for federated learning. It is responsible for model parameter uploading, model aggregation, and model dispatch, which are essential steps in federated learning.

6. FL CLIENT: it hosts the Task Manager and Explorer components and performs local model training, which is also an essential step in federated learning.
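To make the Task Scheduler's role more concrete, here is a minimal sketch of how a scheduler might rank clients by combining model quality with current load. This is not FedVision's actual scheduling algorithm. The `ClientStatus` fields, the `select_clients` function, and the linear scoring rule with `quality_weight` are all hypothetical names and choices made for illustration only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClientStatus:
    """Hypothetical per-client report (field names are assumptions)."""
    client_id: str
    model_quality: float  # assumed to lie in [0, 1], higher is better
    load: float           # assumed to lie in [0, 1], as an Explorer-like monitor might report


def select_clients(statuses: List[ClientStatus], k: int,
                   quality_weight: float = 0.5) -> List[str]:
    """Return the ids of the k clients with the best combined score.

    The score is an assumed linear trade-off: good local model quality
    raises it, high resource load lowers it.
    """
    def score(s: ClientStatus) -> float:
        return quality_weight * s.model_quality + (1.0 - quality_weight) * (1.0 - s.load)

    ranked = sorted(statuses, key=score, reverse=True)
    return [s.client_id for s in ranked[:k]]


if __name__ == "__main__":
    reports = [
        ClientStatus("A", model_quality=0.9, load=0.9),
        ClientStatus("B", model_quality=0.8, load=0.2),
        ClientStatus("C", model_quality=0.3, load=0.1),
    ]
    print(select_clients(reports, k=2))
```

In this sketch a heavily loaded client can be passed over even when its local model is strong, which is the kind of trade-off the Task Scheduler description implies.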

Object Detection:

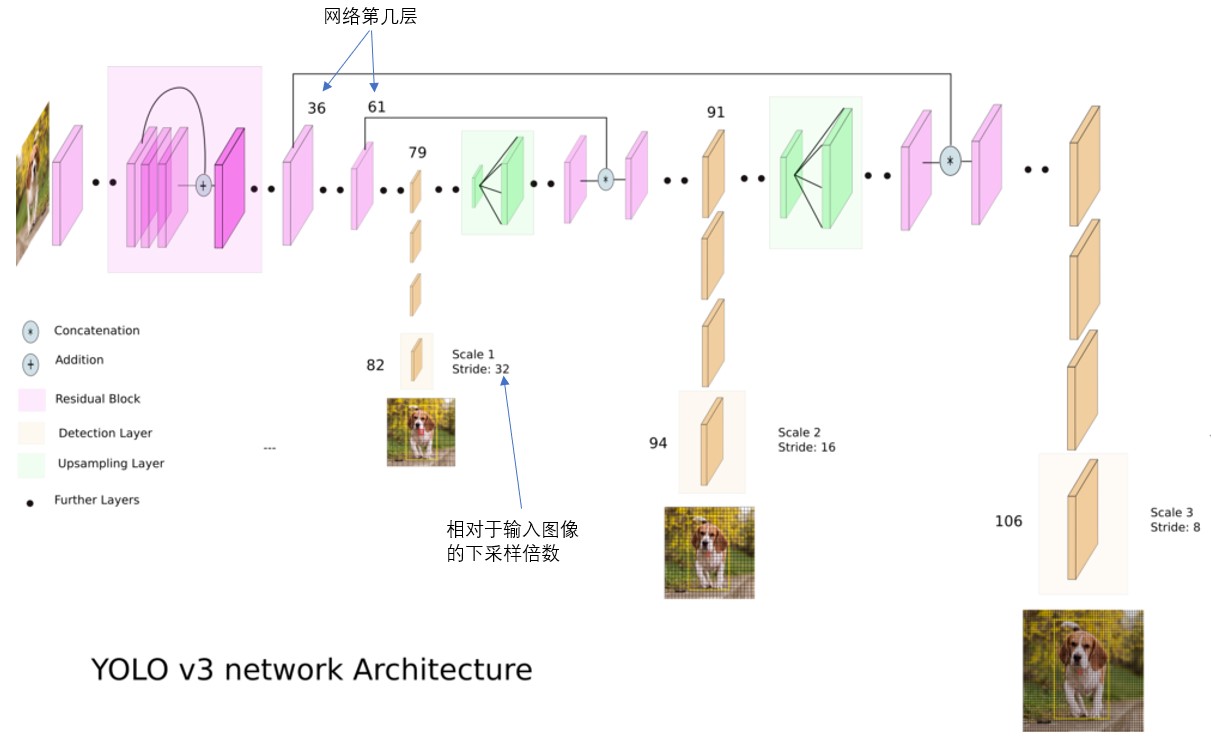

The object detection algorithm is the core of our FedVision platform. Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class in digital images and videos. Over the past decade, it has been the focus of extensive research and has achieved unprecedented success in many commercial applications. Take YOLOv3 as an example; its architecture is shown in Figure 7.

Figure 6: Flame detection with YOLOv3.

The approach of YOLOv3 can be summarized as follows. Given an image, such as the image of a flame shown in Figure 6, it is first divided into an s × s grid, with each grid cell responsible for detecting a target object whose centre falls within that cell (the blue square cell in Figure 6 is used to detect flames). For each grid cell, the algorithm performs the following computations:

- Predicting the positions of B bounding boxes. Each bounding box is denoted by a 4-tuple (x, y, w, h), where (x, y) are the coordinates of the centre, and (w, h) are the width and height of the bounding box, respectively.

- Estimating the confidence score for the B predicted bounding boxes. The confidence score consists of two parts: 1) whether a bounding box contains the target object, and 2) how precise the boundary of the box is. The first part can be denoted as p(obj). If the bounding box contains the target object, then p(obj) = 1; otherwise, p(obj) = 0. The precision of the bounding box can be measured by its intersection-over-union (IOU) value with respect to the ground truth bounding box. Thus, the confidence score can be expressed as θ = p(obj) × IOU.

- Computing the class conditional probability, p(c_ij | obj) ∈ [0, 1], for each of the C classes.
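The grid assignment and confidence score described above can be sketched in a few lines of Python. This is an illustrative sketch rather than YOLOv3's actual implementation: the function names are made up for this example, boxes use the centre-based (x, y, w, h) convention from the text, and the image is assumed to be square.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h) with (x, y) the box centre


def to_corners(box: Box) -> Tuple[float, float, float, float]:
    """Convert a centre-based box to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = box
    return x - w / 2, y - h / 2, x + w / 2, y + h / 2


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two centre-based boxes."""
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def confidence(p_obj: float, predicted: Box, ground_truth: Box) -> float:
    """Confidence score: p(obj) multiplied by the IOU with the ground truth."""
    return p_obj * iou(predicted, ground_truth)


def responsible_cell(box: Box, image_size: float, s: int) -> Tuple[int, int]:
    """(row, col) of the grid cell containing the box centre (square image assumed)."""
    x, y, _, _ = box
    cell = image_size / s
    col = min(int(x // cell), s - 1)
    row = min(int(y // cell), s - 1)
    return row, col


if __name__ == "__main__":
    truth = (1.0, 1.0, 2.0, 2.0)
    pred = (2.0, 1.0, 2.0, 2.0)
    print(confidence(1.0, pred, truth))
    print(responsible_cell((250.0, 100.0, 50.0, 50.0), image_size=416.0, s=13))
```

A perfect prediction gives a confidence of 1, a box with no object gives 0 regardless of overlap, and partial overlap scales the score between the two.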

Figure 7: YOLOv3 Architecture.

Federated Learning:

In order to understand the federated learning technologies incorporated into the FedVision platform, we introduce the concept of horizontal federated learning (HFL). HFL, also known as sample-based federated learning, can be applied in scenarios in which datasets share the same feature space, but differ in sample space (Figure 4). In other words, different parties own datasets which are of the same format but collected from different sources. HFL is suitable for the application scenario of FedVision since it aims to help multiple parties (i.e. data owners) with data from the same feature space (i.e. labelled image data) to jointly train federated object detection models. The word “horizontal” comes from the term “horizontal partition”, which is widely used in the context of the traditional tabular view of a database (i.e. rows of a table are horizontally partitioned into different groups and each row contains the complete set of data features). We summarize the conditions for HFL for two parties, without loss of generality, as follows.

$$X_a = X_b, \quad Y_a = Y_b, \quad I_a \neq I_b$$

where the data features and labels of the two parties, $(X_a, Y_a)$ and $(X_b, Y_b)$, are the same, but the data entity identifiers $I_a$ and $I_b$ can be different. $D_a$ and $D_b$ denote the datasets owned by Party a and Party b, respectively.
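As a rough illustration of the HFL condition, the sketch below checks whether two parties' datasets share the same feature and label space while holding different sample identifiers. The `DatasetSchema` class and `satisfies_hfl` function are hypothetical names introduced for this example and are not part of FedVision.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class DatasetSchema:
    """Hypothetical summary of one party's dataset."""
    feature_names: FrozenSet[str]  # feature space X
    label_names: FrozenSet[str]    # label space Y
    sample_ids: FrozenSet[str]     # data entity identifiers I


def satisfies_hfl(a: DatasetSchema, b: DatasetSchema) -> bool:
    """True when feature and label spaces match but the identifier sets differ."""
    same_space = (a.feature_names == b.feature_names
                  and a.label_names == b.label_names)
    return same_space and a.sample_ids != b.sample_ids


if __name__ == "__main__":
    party_a = DatasetSchema(frozenset({"image"}), frozenset({"bbox", "class"}),
                            frozenset({"a-001", "a-002"}))
    party_b = DatasetSchema(frozenset({"image"}), frozenset({"bbox", "class"}),
                            frozenset({"b-001"}))
    print(satisfies_hfl(party_a, party_b))
```

Two camera operators who both hold labelled images in the same annotation format, but of different scenes, would pass this check.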

Under HFL, data collected and stored by each party are no longer required to be uploaded to a common server to facilitate model training. Instead, the model framework is sent from the federated learning server to each party, which then uses the locally stored data to train this model. After training converges, the encrypted model parameters from each party are sent back to the server. They are then aggregated into a global federated model. This global model will eventually be distributed to the parties in the federation to be used for inference in subsequent operations.
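The aggregation step can be sketched as a weighted average of the clients' parameters. The text does not specify which aggregation rule FedVision uses, so this example assumes a federated-averaging style rule weighted by each client's sample count. It also omits the encryption mentioned above, and the `aggregate` function and the dictionary layout of the parameters are assumptions made for illustration.

```python
from typing import Dict, List, Sequence

Params = Dict[str, List[float]]  # parameter name -> flat list of values (assumed layout)


def aggregate(client_params: Sequence[Params],
              num_samples: Sequence[int]) -> Params:
    """Average client parameters, weighting each client by its sample count."""
    if not client_params or len(client_params) != len(num_samples):
        raise ValueError("need one sample count per client and at least one client")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    global_params: Params = {}
    for name, reference in client_params[0].items():
        global_params[name] = [
            sum(p[name][i] * n for p, n in zip(client_params, num_samples)) / total
            for i in range(len(reference))
        ]
    return global_params


if __name__ == "__main__":
    party_a = {"w": [1.0, 2.0]}
    party_b = {"w": [3.0, 4.0]}
    # Party b holds three times as much data, so it pulls the average towards itself.
    print(aggregate([party_a, party_b], num_samples=[1, 3]))
```

Only the parameters and sample counts cross the network in this sketch; the training images themselves stay with each party, which is the point of the HFL workflow.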
